PixelNeRF (0.03 FPS) vs. FWD (35.4 FPS)

IBRNet (0.27 FPS) vs. FWD (35.4 FPS)

FWD-D (43.2 FPS) vs. FWD (35.4 FPS)

Abstract

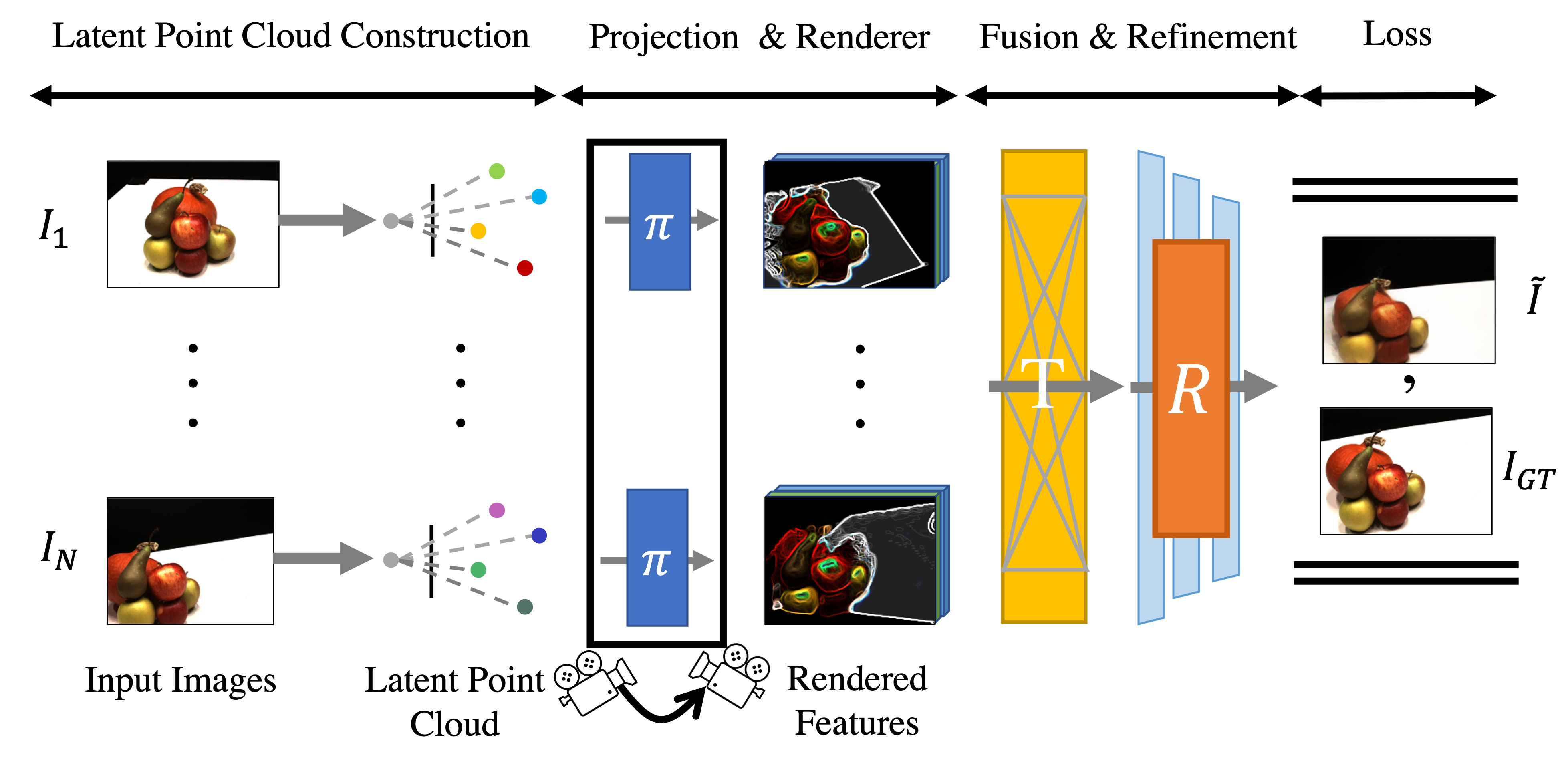

Novel view synthesis (NVS) is a challenging task requiring systems to generate photorealistic images of scenes from new viewpoints, where both quality and speed are important for applications. Previous image-based rendering (IBR) methods are fast but produce poor quality when input views are sparse. Recent Neural Radiance Fields (NeRF) and their generalizable variants give impressive results but are not real-time. We propose FWD, a generalizable NVS method for sparse inputs that gives high-quality synthesis in real time. With explicit depth and differentiable rendering, it achieves results competitive with state-of-the-art methods, with a 130-1000× speedup and better perceptual quality. When sensor depth is available, it can be seamlessly integrated during either training or inference to improve image quality while retaining real-time speed. With the growing prevalence of depth sensors, we hope that methods making use of depth will become increasingly useful.
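To make the role of explicit depth concrete, the sketch below shows generic forward warping with a pinhole camera: each source pixel is unprojected with its depth, moved into the target camera frame, and projected again. This is a minimal NumPy illustration, not the FWD implementation. The function name `forward_warp_points`, the shared intrinsics `K`, and the pose convention `T_src_to_tgt` are assumptions made for the example, and the differentiable rendering step mentioned in the abstract is omitted.

```python
import numpy as np


def forward_warp_points(depth, K, T_src_to_tgt):
    """Warp every source pixel into a target view using its depth.

    Hypothetical helper for illustration only.
    depth:        (H, W) depth along the source camera z-axis.
    K:            (3, 3) pinhole intrinsics, assumed shared by both views.
    T_src_to_tgt: (4, 4) rigid transform from source to target camera frame.
    Returns target pixel coordinates (H, W, 2), target depths (H, W),
    and a boolean mask (H, W) of points in front of the target camera.
    """
    H, W = depth.shape
    u, v = np.meshgrid(np.arange(W, dtype=np.float64),
                       np.arange(H, dtype=np.float64))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1)  # homogeneous pixels

    # Unproject: X_src = depth * K^-1 [u, v, 1]^T
    pts_src = (pix @ np.linalg.inv(K).T) * depth[..., None]

    # Move the 3D points into the target camera frame.
    R, t = T_src_to_tgt[:3, :3], T_src_to_tgt[:3, 3]
    pts_tgt = pts_src @ R.T + t

    # Project with the same intrinsics and divide by depth.
    proj = pts_tgt @ K.T
    z = proj[..., 2]
    valid = z > 1e-8  # points behind the camera cannot be projected
    uv = proj[..., :2] / np.where(valid, z, 1.0)[..., None]
    return uv, z, valid


# Usage: a fronto-parallel plane at depth 2 seen after a small x translation.
K = np.array([[100.0, 0.0, 2.0],
              [0.0, 100.0, 2.0],
              [0.0, 0.0, 1.0]])
depth = np.full((4, 4), 2.0)
T = np.eye(4)
T[0, 3] = 0.1
uv, z, valid = forward_warp_points(depth, K, T)
```

In this usage example every pixel shifts horizontally by `f * t_x / depth = 100 * 0.1 / 2 = 5` pixels, which is the familiar disparity relation. A full renderer would still need to splat the warped points onto the target image grid and resolve occlusions.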

Video

BibTeX

@article{Cao2022FWD,
  author = {Cao, Ang and Rockwell, Chris and Johnson, Justin},
  title = {FWD: Real-time Novel View Synthesis with Forward Warping and Depth},
  journal = {CVPR},
  year = {2022},
}

Acknowledgement

Toyota Research Institute provided funds to support this work, but this article solely reflects the opinions and conclusions of its authors and not TRI or any other Toyota entity. We thank Dandan Shan, Hao Ouyang, Jiaxin Xie, Linyi Jin, and Shengyi Qian for helpful discussions.